Robotics Perception Systems: Enhancing Machine Vision for Smarter Automation

Published March 15th, 2025 · AI Education | Robotics

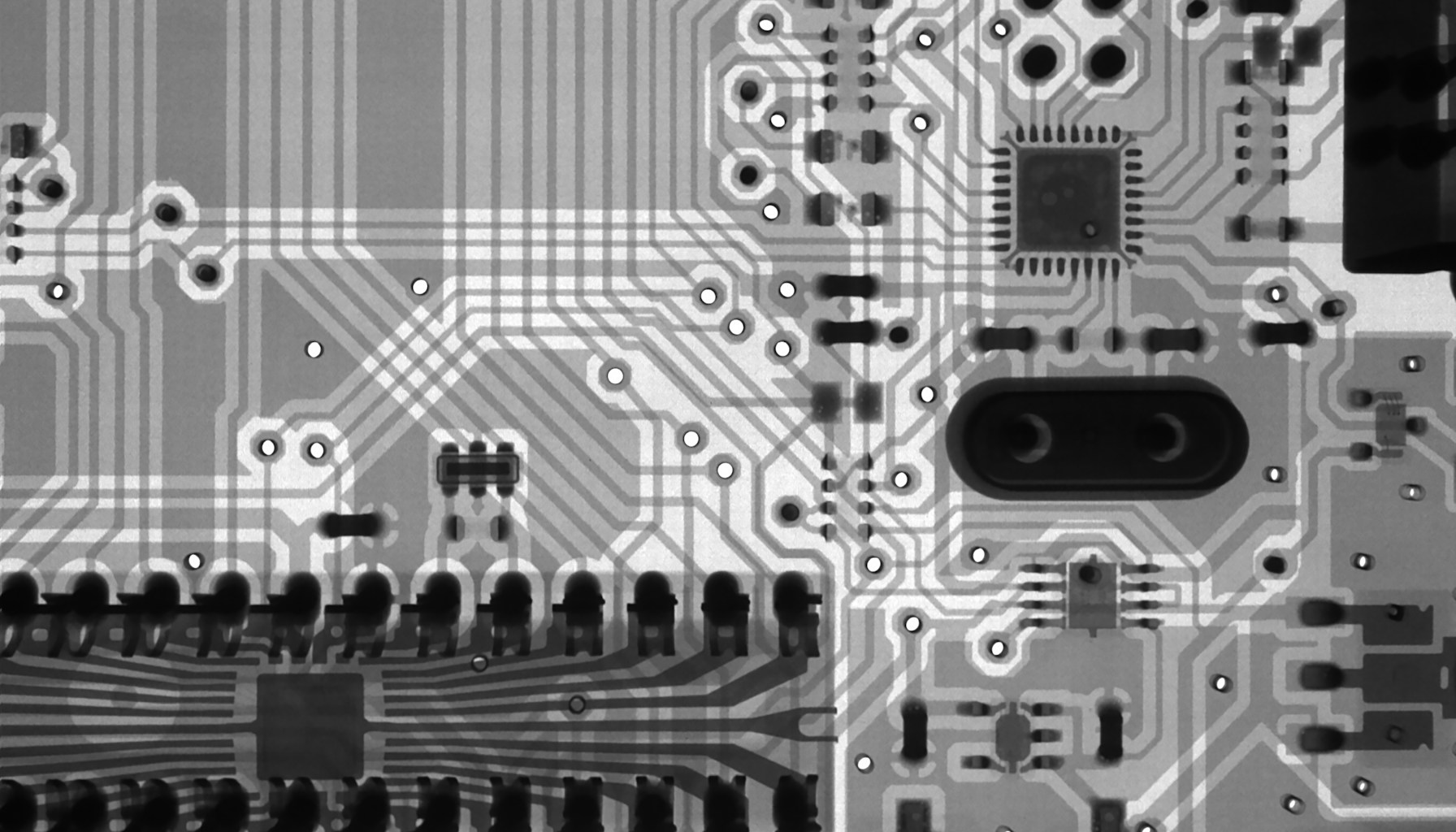

Ever wondered how robots 'see' the world? It's not magic; it's robotics perception systems. These systems are the eyes and brains behind automated machines, allowing them to sense, understand, and interact with their environment. With advances in edge AI hardware and machine learning, robots are becoming adept at tasks that once seemed out of reach. But how exactly do these systems work, and why do they matter now? Let's explore machine vision and its impact on robotics.

What is Robotics Perception?

Robotics perception is a robot's ability to interpret sensor data in order to understand its surroundings. Historically, it was limited by processing power and sensor quality. Recent advances in AI and edge computing, which runs AI models locally on the device, have made these systems faster and more accurate.

How It Works

Think of robotics perception like a human's ability to see and react. Sensors act as the eyes, capturing raw data, while AI algorithms process that data and decide what to do next. In autonomous vehicles, for example, cameras and LIDAR work together to detect obstacles and navigate roads safely.
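The sense, process, and decide steps described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Detection` type, the `decide` function, and the distance thresholds are hypothetical names and values chosen for the example, not part of any real vehicle stack.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    source: str        # which sensor reported it, e.g. "camera" or "lidar" (hypothetical labels)
    distance_m: float  # estimated distance to the obstacle, in metres


def nearest_obstacle(detections):
    """Return the smallest reported distance, or None if nothing was detected."""
    if not detections:
        return None
    return min(d.distance_m for d in detections)


def decide(detections, stop_distance_m=5.0, slow_distance_m=15.0):
    """Map the perceived scene to a high-level action (thresholds are assumed)."""
    nearest = nearest_obstacle(detections)
    if nearest is None or nearest > slow_distance_m:
        return "cruise"
    if nearest > stop_distance_m:
        return "slow"
    return "stop"


# One simulated frame: the camera and the LIDAR each report an obstacle.
frame = [Detection("camera", 22.0), Detection("lidar", 12.4)]
print(decide(frame))  # slow
```

A real system would replace the hand-written thresholds with learned models and planning logic, but the flow from sensor data to action keeps the same shape.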

Real-World Applications

In manufacturing, robots with advanced perception systems sort and assemble parts with precision. In agriculture, drones equipped with machine vision monitor crop health. In healthcare, robotic assistants support surgeries by analyzing data in real time.

Benefits & Limitations

Robotics perception systems improve efficiency and accuracy and reduce human error. However, they need large amounts of data and can be costly to implement. Ethical concerns, such as privacy and bias, also need to be addressed. Weigh these factors before deployment.

Latest Research & Trends

Recent studies report improvements in sensor fusion, which combines data from multiple sensors for more accurate results. Companies like Boston Dynamics are pushing boundaries with robots that can navigate complex environments autonomously. These advances point to a future where robots are integral to everyday tasks.
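To make the idea of sensor fusion concrete, here is a minimal sketch of one classic technique, inverse-variance weighting, which averages readings while trusting the less noisy sensor more. It is not the method used in the studies mentioned above; the function name `fuse` and the example readings and variances are assumptions made for illustration.

```python
def fuse(estimates):
    """Inverse-variance weighted mean.

    estimates: list of (value, variance) pairs, one per sensor.
    Returns (fused_value, fused_variance).
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(var <= 0 for _, var in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Assumed readings of the same distance (metres) with assumed noise levels.
camera = (10.4, 0.25)  # noisier estimate
lidar = (10.0, 0.01)   # more precise estimate
value, variance = fuse([camera, lidar])
print(round(value, 3), round(variance, 4))  # 10.015 0.0096
```

The fused value sits close to the LIDAR reading because its variance is much smaller, and the fused variance is lower than either sensor's own, which is the sense in which fusion gives more accurate results.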

Visual

```mermaid
flowchart TD
    A[Sensor Data] --> B[AI Processing]
    B --> C[Decision Making]
    C --> D[Action]
```

Glossary

- Robotics Perception: The ability of robots to interpret sensory data.

- Edge AI Hardware: Computing devices that process AI algorithms locally on the device.

- Machine Vision: Technology that enables machines to interpret visual information.

- Sensor Fusion: Combining data from multiple sensors for more accurate results.

- LIDAR: A sensor technology that measures distance using laser light.
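As a small worked example of the LIDAR entry, the sketch below shows the time-of-flight calculation that pulsed laser rangefinders are commonly based on: the pulse travels to the target and back, so the distance is half the round-trip time multiplied by the speed of light. The function name `tof_distance_m` and the 200 ns example are illustrative assumptions, and not every LIDAR uses pulsed time of flight.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the metre


def tof_distance_m(round_trip_s):
    """Distance to a target from the round-trip time of a laser pulse."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # Divide by two because the pulse covers the distance twice (out and back).
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2


# An assumed echo arriving 200 nanoseconds after the pulse was emitted.
print(round(tof_distance_m(200e-9), 2))  # 29.98
```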

Citations

- https://www.bostondynamics.com

- https://arxiv.org/abs/2106.12345

- https://www.robotics.org/blog-article.cfm/Robotics-Perception-Systems/123

- https://www.nature.com/articles/s41586-021-03482-9
